Cleaning Up PostgreSQL Bloat

As a follow-up to my previous post on checking for bloat, I figured I’d share some methods for actually cleaning up bloat once you find it. I’ll also cover some updates to the script I wrote, prompted by issues I ran into and by feedback from people who have already used it.

First, as these examples will show, the most important thing you need to clean up bloat is extra disk space. This means it is critically important to monitor your disk space usage if bloat turns out to be an issue for you. And if your database is of any reasonably large size, and you regularly do updates & deletes, bloat will be an issue at some point. I’d say a good goal is to always try to stay below 75% disk usage, either by archiving and/or pruning old data that’s no longer needed, or by simply adding more disk space or migrating to new hardware altogether. Having less than 25% free can put you in a precarious situation where you may have a whole lot of disk space you can free up, but not enough room to actually do any cleanup at all, or not without possibly impacting performance in big ways (e.g. you have to drop & recreate a bloated index instead of rebuilding it concurrently, making previously fast queries extremely slow).
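As a rough illustration of that 75% guideline, here is a minimal sketch of a disk usage check in Python. The function names are my own for this example, and the path you pass in is whatever volume holds your data directory. It only reads filesystem totals through the standard library and knows nothing about PostgreSQL itself.

```python
import shutil


def used_percent(total_bytes: int, free_bytes: int) -> float:
    """Percentage of the volume currently in use."""
    if total_bytes <= 0:
        raise ValueError("total_bytes must be positive")
    return 100.0 * (total_bytes - free_bytes) / total_bytes


def over_limit(total_bytes: int, free_bytes: int, limit: float = 75.0) -> bool:
    """True once usage reaches the limit (75% by default, the goal suggested above)."""
    return used_percent(total_bytes, free_bytes) >= limit


def volume_over_limit(path: str, limit: float = 75.0) -> bool:
    """Check the filesystem that holds `path`, e.g. the PostgreSQL data directory."""
    usage = shutil.disk_usage(path)  # named tuple: total, used, free (in bytes)
    return over_limit(usage.total, usage.free, limit)
```

Calling `volume_over_limit("/path/to/your/data/volume")` from a cron job or a monitoring hook would be one way to get warned before you run out of room for cleanup. The limit is a parameter because 75% is a rule of thumb, not a hard number.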

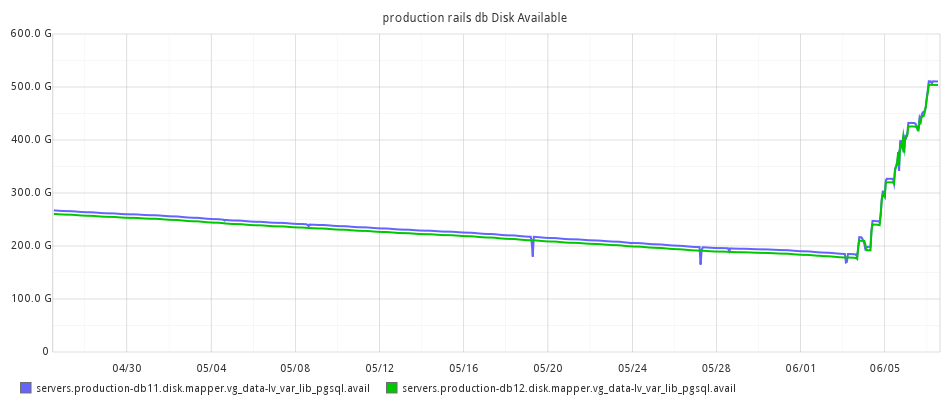

The above graph (y-axis terabytes) shows my recent adventures in bloat cleanup after using this new scan, and validates that what is reported by pg_bloat_check.py is actually bloat. In both this graph and the one below, there were no data purges going on and each of the significant line changes coincided exactly with a bloat cleanup session. You can see a huge drop in size back on May 26-27th. Looking closer, there is an initial tiny drop, followed by a fairly big increase, then the huge drop. This is me first fixing one small, but very bloated, index and then running pg_repack to take care of both table bloat and a lot of index bloat. This is actually the group_members table I used as the example in my previous post.

Over the next week or so I worked through roughly 80 bloated objects to recover about 270GB of disk space. Now, it may turn out that the bloat on some of these objects quickly returns to its previous level, and those could be candidates for exclusion from the regular report. But I figured I’d go through everything wasting more than a few hundred MB just so I can better assess what the actual normal bloat level of this database is.

Here’s another example from another client that hadn’t really had any bloat monitoring in place at all before (that I was aware of anyway). It’s showing disk space available instead of total usage, hence the line going the opposite direction, and db12 is a slave of db11.

The easiest, but most intrusive, bloat removal method is to just run a VACUUM FULL on the given table. This takes an exclusive lock on the table (blocking all reads and writes) and completely rebuilds the table into new underlying files on disk. This clears out 100% of the bloat in both the table and all of its indexes, at the expense of blocking all access for the duration. If you can afford the outage, it’s the easiest, most reliable method available. For very small tables this is likely your best option.

The next option is to use the REINDEX command. This can be run on several levels: INDEX, TABLE, DATABASE. PostgreSQL 9.5 introduced the SCHEMA level as well. Running it on the TABLE level has the same consequence of likely locking the entire table for the duration, so if you’re going that route, you might as well just run a VACUUM FULL. The same goes for running at the DATABASE level, although 9.5 also added parallel vacuuming to the vacuumdb command-line tool (its --jobs option), which would be much more efficient. When running on the INDEX level, things are a little more flexible. All writes to the table are blocked, but a read-only query that does not hit the index you’re rebuilding is not blocked. If you can afford several shorter outages on a given table, or the index is rather small, this is the best route to take for bloat cleanup.

If you’ve got tables that can’t really afford long outages, then things start getting tricky. Before getting into pg_repack, I’d like to share some methods that can be used without third-party tools. Index bloat is the most common occurrence, so I’ll start with that.

If you’ve just got a plain old index (b-tree, gin or gist), there’s a combination of 3 commands that can clear up bloat with minimal downtime (depending on database activity). The CONCURRENTLY flag to the CREATE INDEX command allows an index to be built without blocking any reads or writes to the table. So say we had this bloated index:

| objectname | objecttype | dead_tuples | free_space | free_percent |
|---|---|---|---|---|
| index_guests_on_state | index | 0 bytes | 9460 MB | 60.04 |

No dead tuples (so autovacuum is running efficiently) and 60% of the total index is free space that can be reclaimed. A handy command to get the definition of an index is pg_get_indexdef(regclass). In this case it’s a very easy index definition, but when you start getting into some really complicated functional or partial indexes, having a definition you can copy-n-paste is a lot safer.

# SELECT pg_get_indexdef('index_guests_on_state'::regclass);

pg_get_indexdef

------------------------------------------------------------------

CREATE INDEX index_guests_on_state ON guests USING btree (state)

Now we can write our set of commands to rebuild the index:

CREATE INDEX CONCURRENTLY index_guests_on_state_new ON guests USING btree (state);

ANALYZE guests;

DROP INDEX CONCURRENTLY index_guests_on_state;

ANALYZE guests;

ALTER INDEX index_guests_on_state_new RENAME TO index_guests_on_state;

I threw the ANALYZE calls in there just to ensure that the catalogs are up to date for any queries coming in during this rebuild. They may not really be necessary, but I was doing this on a very busy table, so I’d rather be paranoid about it. Neither the CREATE nor the DROP command will block any other sessions that happen to come in while this is running. However, that final ALTER INDEX call can block other sessions coming in that try to use the given table. But the rename is optional and can be done at any time later. After the DROP command, your bloat has been cleaned up. While concurrent index creation does not block, there are some caveats with it, the major one being that it can take much longer to rebuild the index. One of these for the second client above took 4.5 hours to complete. The documentation on building indexes concurrently goes into more detail on this, and on how to deal with it possibly failing.
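Since typos in hand-written DDL are the main risk with this approach, here is a minimal sketch of generating the same five statements from the text that pg_get_indexdef() returns. The helper name, the `_new` suffix default and the regular expression are assumptions of this example. It only handles simple, unquoted index names, and the output is meant to be reviewed before you run it. The statements also have to be run one at a time, outside a transaction block, because CREATE INDEX CONCURRENTLY cannot run inside one.

```python
import re
from typing import List

# pg_get_indexdef() output for a plain index starts with:
#   CREATE [UNIQUE] INDEX <name> ON ...
_INDEXDEF = re.compile(r"^CREATE (UNIQUE )?INDEX (\S+) ON ")

# Catalog query listing indexes marked invalid, which is what a failed
# concurrent build leaves behind.
FIND_INVALID_INDEXES = (
    "SELECT indexrelid::regclass FROM pg_index WHERE NOT indisvalid;"
)


def rebuild_statements(indexdef: str, table: str, suffix: str = "_new") -> List[str]:
    """Build the CREATE / ANALYZE / DROP / ANALYZE / RENAME sequence for one index."""
    match = _INDEXDEF.match(indexdef)
    if match is None:
        raise ValueError("unrecognized index definition: %r" % indexdef)
    unique, name = match.group(1) or "", match.group(2)
    if '"' in name:
        raise ValueError("quoted index names are not handled by this sketch")
    new_name = name + suffix
    rest = indexdef[match.end():].rstrip(";")  # everything after "ON "
    return [
        "CREATE %sINDEX CONCURRENTLY %s ON %s;" % (unique, new_name, rest),
        "ANALYZE %s;" % table,
        "DROP INDEX CONCURRENTLY %s;" % name,
        "ANALYZE %s;" % table,
        "ALTER INDEX %s RENAME TO %s;" % (new_name, name),
    ]
```

Feeding it the definition shown earlier, with `guests` as the table, reproduces the statements above. If a concurrent build fails partway, the half-built index stays behind marked invalid. Running `FIND_INVALID_INDEXES` is one way to spot it so it can be dropped before retrying.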

If you’re running this on a UNIQUE index, you may run into an issue if it was created as a UNIQUE CONSTRAINT vs a UNIQUE INDEX. Functionally, both are the same as far as PostgreSQL is concerned. And under the hood, creating a unique constraint will just create a unique index anyway. The big difference is that you will not be able to drop a unique constraint concurrently. You will have to do an ALTER TABLE [...] DROP CONSTRAINT [...] call, which will require an exclusive lock, just like the RENAME above. Also, the index is more flexible since you can make a partial unique index as well. So it’s better to just make a unique index vs a constraint if possible.
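To find out ahead of time which case you’re in, you can look the index up in pg_constraint, where the conindid column points at the index backing a constraint. The sketch below assumes a DB-API cursor using psycopg2-style `%s` placeholders. The function names are made up for this example, and identifiers are interpolated as-is, so treat the output as something to review, not something to feed untrusted input.

```python
from typing import Optional, Tuple

# conindid links a constraint to its supporting index. Foreign keys also set
# conindid (to the referenced index), so restrict to the constraint types that
# own an index: primary key, unique, exclusion.
CONSTRAINT_FOR_INDEX = """
SELECT conname, conrelid::regclass::text
FROM pg_constraint
WHERE conindid = %s::regclass
  AND contype IN ('p', 'u', 'x')
"""


def drop_statement(index: str, constraint: Optional[Tuple[str, str]] = None) -> str:
    """Pick the DROP form: concurrent for a bare index, ALTER TABLE for a constraint."""
    if constraint is None:
        return "DROP INDEX CONCURRENTLY %s;" % index
    conname, table = constraint
    # No concurrent option here; this needs an exclusive lock on the table.
    return "ALTER TABLE %s DROP CONSTRAINT %s;" % (table, conname)


def plan_drop(cur, index: str) -> str:
    """Look the index up in the catalog and return the matching DROP statement."""
    cur.execute(CONSTRAINT_FOR_INDEX, (index,))
    return drop_statement(index, cur.fetchone())  # fetchone() is None if no row
```

Knowing this before you start the 4-hour concurrent build tells you whether the final step will be a non-blocking drop or a brief exclusive lock you need to schedule.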

PRIMARY KEYs are another special case. Functionally, they’re no different than a unique index with a NOT NULL constraint on the column. But they are marked specially in the catalog and some applications specifically look for them. You can do something very similar to the above, taking advantage of the USING INDEX clause of the ALTER TABLE [...] ADD PRIMARY KEY command.

postgres@production=# CREATE UNIQUE INDEX CONCURRENTLY email_records_pkey_new ON public.email_records USING btree (id);

CREATE INDEX

Time: 2794263.993 ms

postgres@production=# ANALYZE public.email_records;

ANALYZE

Time: 1658.590 ms

postgres@production=# ALTER TABLE public.email_records DROP CONSTRAINT email_records_pkey;

ALTER TABLE

Time: 43.774 ms

postgres@production=# ANALYZE public.email_records;

ANALYZE

Time: 1518.819 ms

postgres@production=# ALTER INDEX public.email_records_pkey_new RENAME TO email_records_pkey;

ALTER INDEX

Time: 0.586 ms

postgres@production=# ALTER TABLE public.email_records ADD PRIMARY KEY USING INDEX email_records_pkey;

ALTER TABLE

Time: 13.219 ms

postgres@production=# ANALYZE public.email_records;

ANALYZE

Time: 1611.376 ms

I gave full command examples here so you can see the runtimes involved. The concurrent index creation took quite a while (about 46.5 minutes), but everything besides the analyze commands was sub-second. Handing ADD PRIMARY KEY an already existing unique index lets it skip the index creation and validation that command would normally do.

As always, there are caveats to this. If the primary key, or any unique index for that matter, has any FOREIGN KEY references to it, you will not be able to drop that index without first dropping the foreign key(s). If there are only 1 or 2 of those, you can likely do this in a transaction surrounding the drop & recreation of the primary key with commands that also drop and recreate the foreign keys. But if you start getting more in there, that’s just taking a longer and longer outage for the foreign key validation, which will lock all tables involved. It also increases the likelihood of an error in the DDL you’re writing to manage recreating everything. In that case, it may just be better to take the outage to rebuild the primary key with the REINDEX command.
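For the case with only one or two foreign keys, here is a minimal sketch of what that surrounding transaction could look like, generated in Python so the drop and recreate halves can’t drift apart. It assumes the new unique index was already built concurrently beforehand (that part cannot run inside a transaction). The helper name and the foreign key in the usage note are hypothetical. It also uses the ADD CONSTRAINT [...] PRIMARY KEY USING INDEX form, which renames the index to match the constraint name, so the separate RENAME step from the session above isn’t needed.

```python
from typing import List, Sequence, Tuple

# Foreign keys that depend on a given unique/primary key index, with the
# definition text needed to recreate them (psycopg2-style placeholder).
REFERENCING_FKS = """
SELECT conrelid::regclass::text, conname, pg_get_constraintdef(oid)
FROM pg_constraint
WHERE contype = 'f' AND conindid = %s::regclass
"""

# (child table, constraint name, definition from pg_get_constraintdef)
ForeignKey = Tuple[str, str, str]


def swap_statements(table: str, pkey: str, new_index: str,
                    fks: Sequence[ForeignKey]) -> List[str]:
    """One transaction: drop FKs, swap the primary key, recreate FKs."""
    stmts = ["BEGIN;"]
    for child, conname, _definition in fks:
        stmts.append("ALTER TABLE %s DROP CONSTRAINT %s;" % (child, conname))
    stmts.append("ALTER TABLE %s DROP CONSTRAINT %s;" % (table, pkey))
    # new_index is given unqualified; it lives in the same schema as the table.
    stmts.append(
        "ALTER TABLE %s ADD CONSTRAINT %s PRIMARY KEY USING INDEX %s;"
        % (table, pkey, new_index)
    )
    for child, conname, definition in fks:
        # Re-adding a foreign key validates existing rows, which takes time.
        stmts.append("ALTER TABLE %s ADD CONSTRAINT %s %s;" % (child, conname, definition))
    stmts.append("COMMIT;")
    return stmts
```

With a single hypothetical foreign key such as `("public.orders", "orders_email_record_id_fkey", "FOREIGN KEY (email_record_id) REFERENCES email_records(id)")`, this yields six statements. Everything between BEGIN and COMMIT holds its locks until the commit, which is exactly why this gets painful as the number of foreign keys grows.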

In all cases where I can use the above methods, I always try to use those first. They’re the native methods built into the database and, as long as you don’t typo the DDL commands, not likely to be prone to any issues cropping up later down the road. And since index bloat is primarily where I see the worst problems, it solves most cases (the second graph above was all index bloat). If you’re unable to use any of them, though, the pg_repack tool is very handy for removing table bloat or handling situations with very busy or complicated tables that cannot take extended outages. It’s gotten pretty stable over the last year or so, but just seeing some of the bugs that were encountered with it previously, I use it as a last resort for bloat removal. Also, if you’re running low on disk space, you may not have enough room for pg_repack since it requires rebuilding the entire table and all indexes in secondary tables before it can remove the original bloated table. As I said above, I did use it where you see that initial huge drop in disk space on the first graph, but before that there was a rather large spike to get there. In that case, the table had many, many foreign keys & triggers and was a very busy table, so it was easier to let pg_repack handle it.
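Because pg_repack builds a full second copy of the table and its indexes before dropping the originals, it’s worth a quick sanity check on free space before starting. A minimal sketch follows. The function name is my own, and the default factor of 2.0 is an assumption based on the rough guidance I recall from the pg_repack documentation, so treat it as a tunable and not a guarantee.

```python
# Size of a table plus all of its indexes and TOAST data, in bytes
# (psycopg2-style placeholder).
TOTAL_SIZE_QUERY = "SELECT pg_total_relation_size(%s::regclass);"


def room_for_repack(relation_bytes: int, free_bytes: int, factor: float = 2.0) -> bool:
    """True if free space covers `factor` times the relation's current total size."""
    if relation_bytes < 0 or free_bytes < 0:
        raise ValueError("sizes must be non-negative")
    return free_bytes >= relation_bytes * factor
```

The current size includes the bloat, so this check errs on the conservative side: the rebuilt copy should come out smaller than what you’re measuring.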

For table bloat, Depesz wrote some blog posts a while ago that are still relevant with some interesting methods of moving data around on disk. This can also be handy when you are very low on disk space.

Bloat Removal Without Table Swapping

Bloat Removal By Tuples Moving

Since I initially wrote my blog post, I’ve had some great feedback from people using pg_bloat_check.py already. I’ve gotten several bugs fixed and added some new features, with version 2.1.0 being the latest available as of this blog post. JSON is now the preferred structured output format if you need to see more details outside of querying the stats table in the database. I also added some additional options with --exclude_object_file that allow for more fine-grained filtering when you want to ignore certain objects in the regular report, but not forever in case they get out of hand. I updated the README with some examples of that since it’s a little more complex.

I also made note of the fact that this script isn’t made for real-time monitoring of bloat status. Since it’s doing full scans on both tables and indexes, it has the potential to force data out of shared buffers. So if you keep running it often, you may affect the performance of queries that rely on data being readily available there. It’s best to run it maybe once a month, or once a week at most, during off-peak hours. If you have particularly troublesome tables you want to keep an eye on more regularly, the --tablename option allows you to scan just that specific table and nothing else. Once you’ve cleaned up the majority of your bloat issues after the first few runs of the script and have seen how bad things were, bloat shouldn’t get out of hand so quickly that you need to run it that often. If it does, you may want to re-evaluate how you’re using PostgreSQL (e.g. MVCC makes it not great as a queuing system).

If anyone else has some handy tips for bloat cleanup, I’d definitely be interested in hearing them.